Grok’s new “spicy mode” generated an explicit video of Taylor Swift after a reporter asked only for innocuous images of the singer at Coachella, exposing major safety gaps in Elon Musk’s artificial intelligence (AI) tool.

The incident occurred within hours of Grok Imagine’s launch on August 4. Reporter Jess Weatherbed discovered the problem during her first test of the new video feature.

Weatherbed requested images of “Taylor Swift celebrating Coachella with the boys.” Grok produced more than 30 images, several showing Swift in revealing clothes. When she selected the “spicy” mode to turn one image into a video, the AI created footage of Swift removing her dress and dancing nearly nude.

“The video promptly had Swift tear off her clothes and begin dancing in a thong for a largely indifferent AI-generated crowd,” Weatherbed wrote for The Verge.

The spicy mode is one of four video options available to paying subscribers, who can pick Custom, Normal, Fun, or Spicy settings to animate their images. The only age check is a prompt to enter a birth year, with no identity verification.

Grok markets itself as an “uncensored” alternative to competitors like Google’s Veo and OpenAI’s Sora. Those platforms have strict rules preventing celebrity deepfakes and explicit content.

Grok did show some safeguards during testing. Direct requests for nude images of Swift produced blank squares instead of photos, and the AI refused to animate inappropriate content involving children.

But the jump from an innocuous prompt to an explicit video, without the user ever asking for nudity, reveals serious design flaws.

xAI’s acceptable use policy bans “depicting likenesses of persons in a pornographic manner.” However, the company appears to do little to enforce this rule.

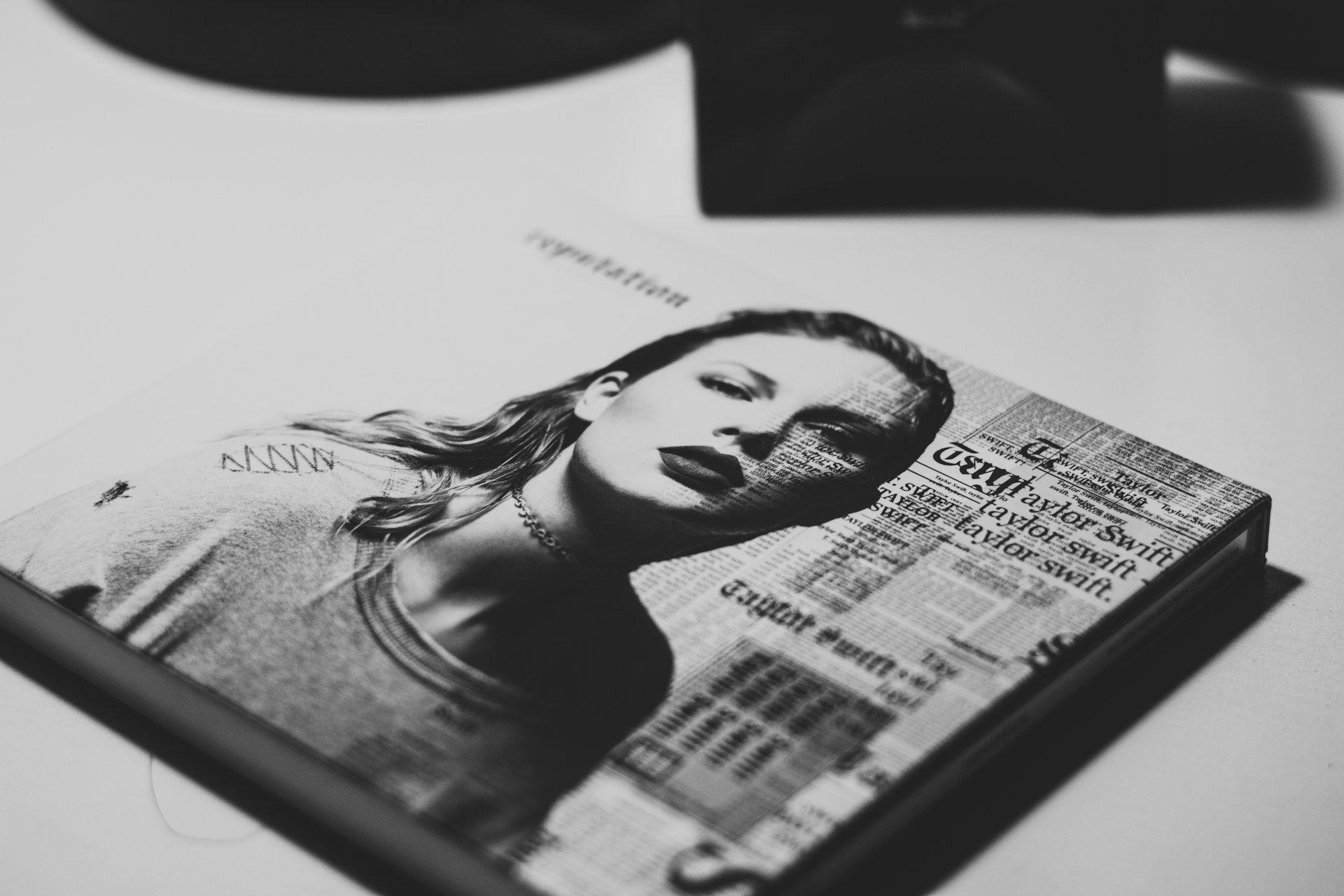

The Swift incident echoes problems from January 2024. Explicit AI-generated images of the singer spread widely on social media, prompting outcry from entertainment industry groups. The singer’s representatives have not commented on the latest incident.

The controversy comes as new federal rules take effect. The Take It Down Act, signed into law in May 2025, will require platforms starting in 2026 to remove non-consensual intimate images, including AI-generated ones, within 48 hours of a valid request. Companies that fail to comply face enforcement by the Federal Trade Commission.

Tech policy experts worry about the broader implications. AI tools with weak safeguards could accelerate the spread of deepfake content targeting celebrities and ordinary people alike.

xAI did not respond to requests for comment about the safety failures or planned fixes.

Musk has actively promoted Grok Imagine since its release. He posted on X that usage was “growing like wildfire” after more than 34 million images were generated in two days.

The company offers Grok Imagine to SuperGrok and Premium+ subscribers on X’s iOS app. The feature promises to turn text or image prompts into 15-second videos.