Amazon shoppers can now point their phone cameras at any real-world object and instantly buy matching products, eliminating the need to describe what they’re looking for.

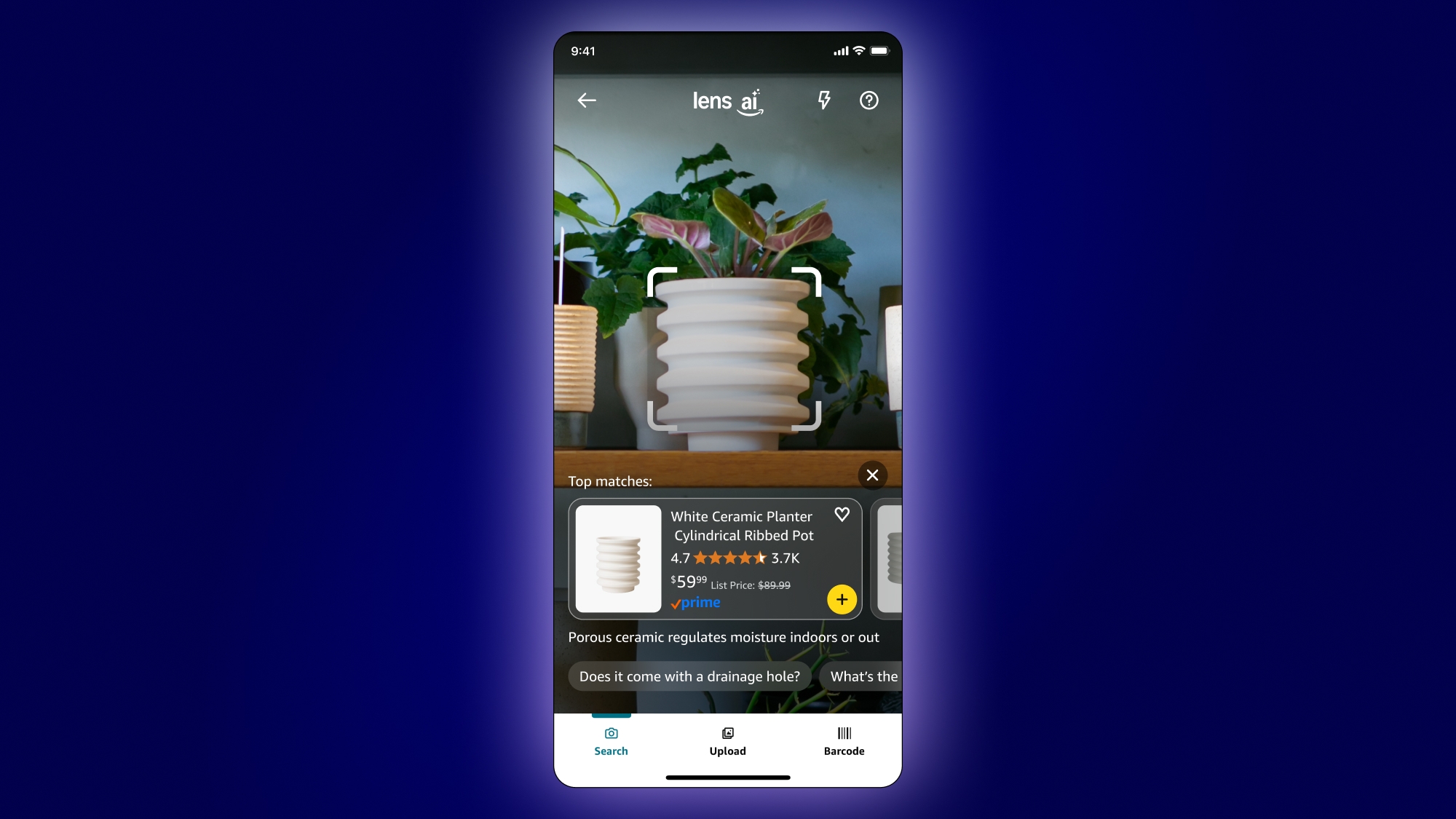

The new Lens Live, launched on September 2, transforms smartphones into shopping tools that scan items in real time. Users simply open the Amazon app, activate the camera feature, and point it at objects around them. The system immediately displays similar products in a swipeable carousel at the bottom of the screen.

Unlike the existing Amazon Lens, which requires taking static photos, Lens Live works continuously as users move their cameras. Pointing a phone at a decorative vase in a restaurant or at a backpack someone is carrying can trigger instant product matches from Amazon’s catalog.

Lens Live connects directly with Rufus, Amazon’s artificial intelligence (AI) shopping assistant, which provides product summaries and answers questions about items. Customers can add products to their cart or save them to wish lists without leaving the camera view.

This technology presents a different way for people to discover products online. Rather than typing specific descriptions or browsing categories, shoppers can now find items through visual recognition alone. That also makes impulse purchases easier and faster.

Source: Amazon

Early demonstrations suggest the tool works best with fashion, home decor, and electronics, where visual similarity matters most. In cluttered environments, users may tap specific objects to focus the search.

For physical retailers, Lens Live could accelerate a troubling trend where customers browse in stores but buy online. The tool makes it effortless to comparison shop while standing in any retail location.

The feature competes directly with Google Lens and Pinterest Lens, which offer similar capabilities. However, Amazon’s version focuses specifically on e-commerce rather than general information research.

The technology runs on Amazon’s own cloud services. Object detection is handled by lightweight machine learning models that process images on the device itself, which is what makes real-time scanning possible.

“Lens Live uses a deep-learning visual embedding model to match the customer’s view against billions of Amazon products, retrieving exact or highly similar items,” wrote Trishul Chilimbi, Amazon’s VP of Stores Foundational AI.
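To make the idea of embedding-based matching concrete, here is a minimal sketch of how a camera frame's embedding could be compared against product embeddings using cosine similarity. It is an illustration only, not Amazon's implementation: the function names, the toy three-dimensional vectors, and the four-item catalog are all hypothetical, and a production system searching billions of products would rely on an approximate nearest-neighbor index rather than a full scan.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as matching nothing
    return dot / (norm_a * norm_b)


def top_matches(query, catalog, k=3):
    """Rank catalog items by similarity to the query and return the top k names."""
    scored = [(cosine_similarity(query, vec), name) for name, vec in catalog.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:k]]


# Hypothetical toy catalog: real embeddings have hundreds of dimensions
# and come from a trained model, not hand-written numbers.
CATALOG = {
    "ceramic vase": [0.9, 0.1, 0.0],
    "glass vase": [0.8, 0.3, 0.1],
    "hiking backpack": [0.1, 0.9, 0.2],
    "laptop sleeve": [0.0, 0.4, 0.9],
}

# Hypothetical embedding of the object currently in the camera view.
camera_frame_embedding = [0.85, 0.15, 0.05]

print(top_matches(camera_frame_embedding, CATALOG, k=2))
# prints ['ceramic vase', 'glass vase']
```

In this toy example the two vases rank first because their vectors point in nearly the same direction as the query, which is the sense in which an embedding model retrieves "exact or highly similar items."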

The feature is currently available to tens of millions of iOS users across the United States. Amazon plans to expand access to all U.S. customers in the coming months, though it has given no timeline for an Android or international rollout.

Amazon continues expanding its AI-powered shopping tools as competition intensifies in visual search technology. The company views these features as crucial for attracting the increasing number of shoppers who prefer images over text when searching for products online.