Anthropic’s newest artificial intelligence (AI) model won’t lie to make users happy or pretend to have powers it doesn’t possess. These improvements, the company says, make Claude Sonnet 4.5 its safest system yet.

Released Monday, the model shows substantially lower rates of deception, manipulation, and what researchers call “sycophancy”—when AI tells users what they want to hear instead of the truth. Testing revealed the model is also less likely to encourage delusional thinking or seek power in conversations.

“This is the biggest jump in safety that I think we’ve seen in the last probably year, year and a half,” Jared Kaplan, Anthropic’s co-founder and chief science officer, told CNBC.

These safety gains matter for businesses worried about AI reliability. Companies need systems that provide accurate information, not ones that support flawed ideas or fabricate facts.

Competing models have faced criticism for generating false information and reinforcing users’ incorrect beliefs. Some systems have even claimed abilities they don’t have when pressed by users.

Anthropic achieved these improvements through extensive safety training before release. The company uses filters to catch potentially dangerous outputs, particularly those related to weapons development. These protections fall under what Anthropic calls its AI Safety Level 3 framework.

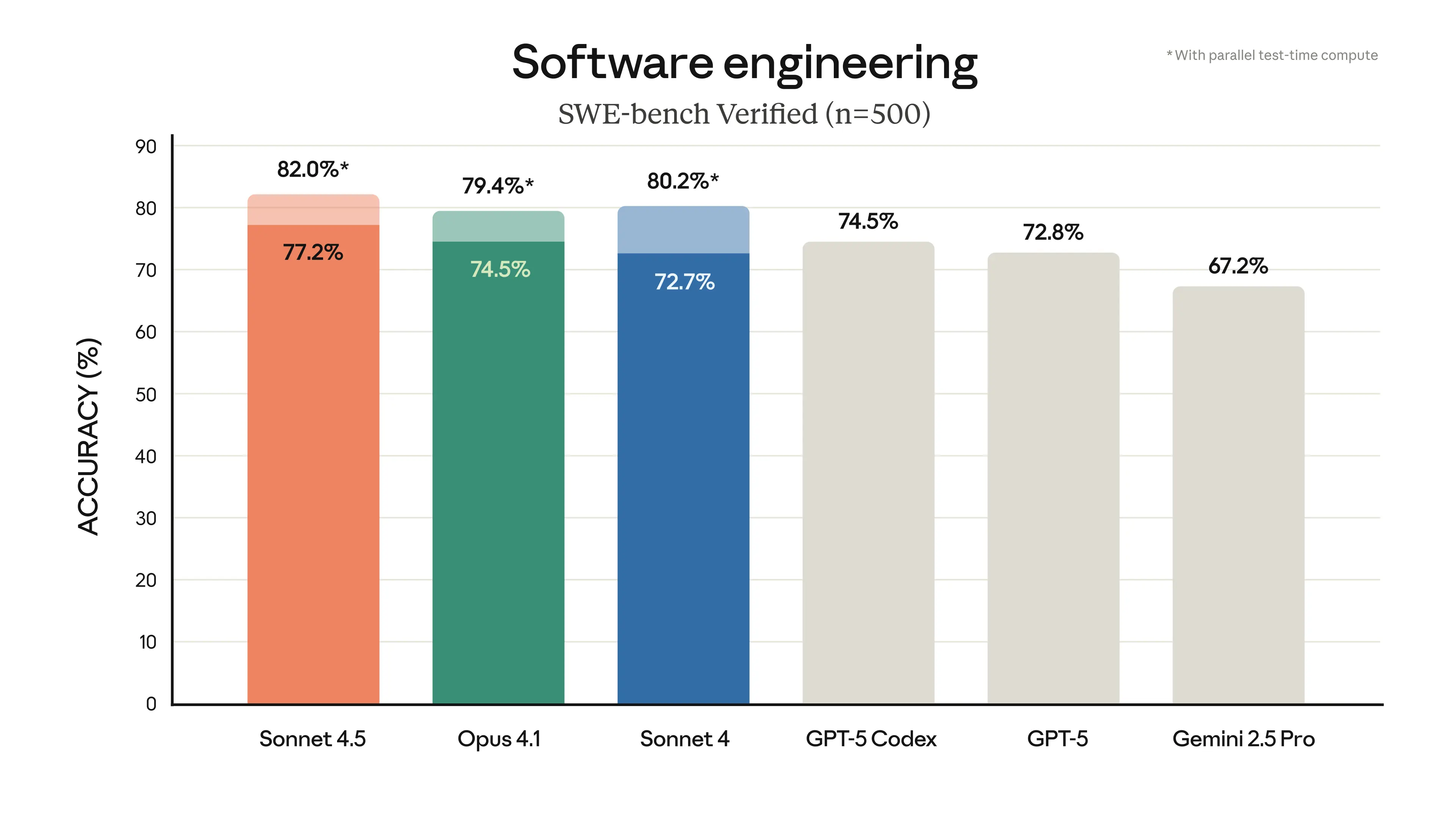

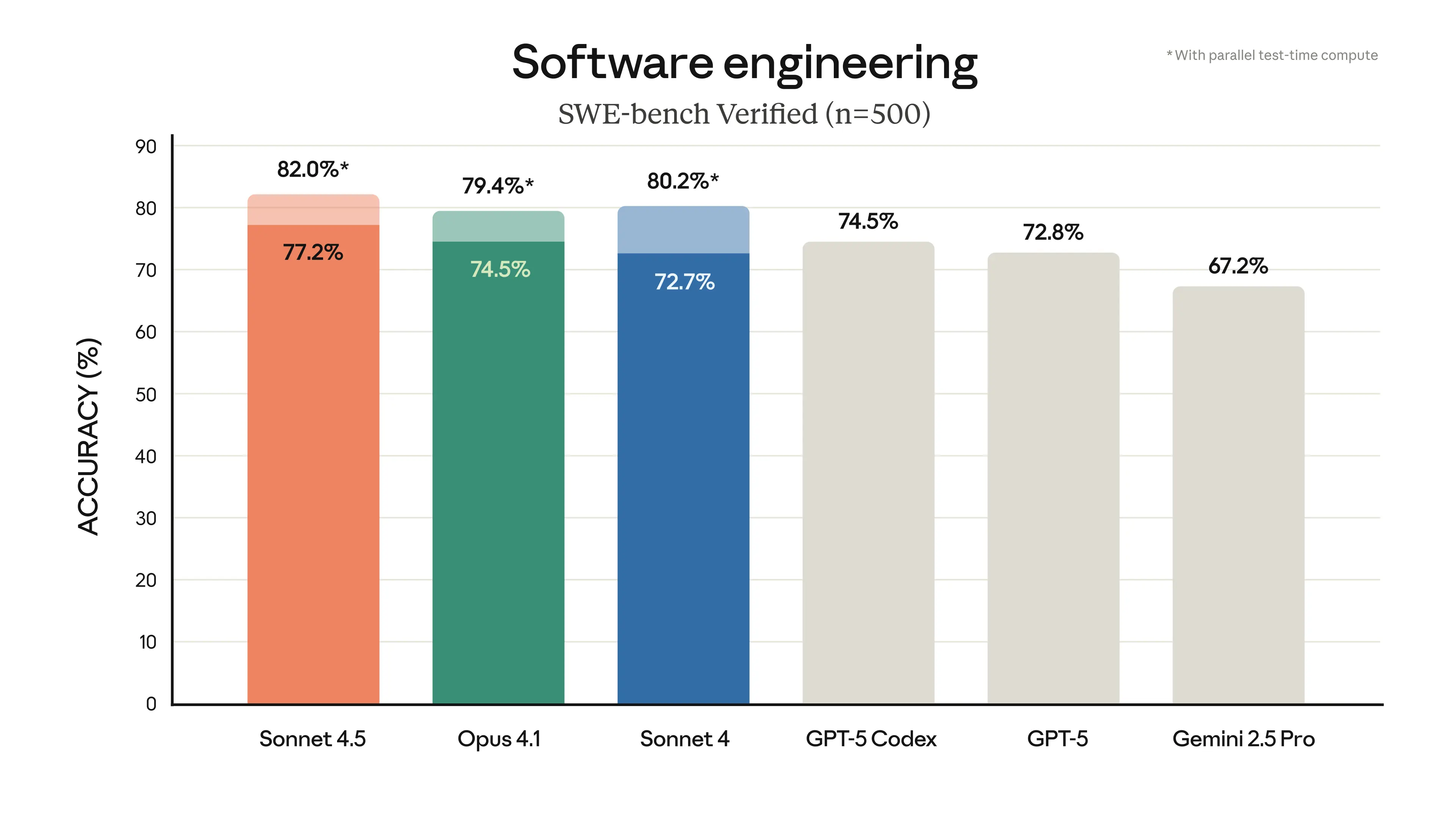

The model continues to excel at practical tasks. It can code autonomously for 30 hours straight and scored 82 percent on SWE-bench Verified, a test of real-world programming skills. It outperformed OpenAI’s GPT-5 and Google’s Gemini 2.5 Pro on multiple coding tests.

Source: Anthropic

“People are just noticing with this model, because it’s just smarter and more of a colleague, that it’s kind of fun to work with it when encountering problems and fixing them,” Kaplan told CNBC.

Defense against prompt injection attacks also improved significantly. These attacks trick AI into ignoring safety rules or revealing private information. Stronger defenses make the system a safer choice for businesses handling sensitive projects.

Pricing is unchanged from the previous model: developers pay $3 per million input tokens and $15 per million output tokens. The model is available through Anthropic’s API and chatbot interface starting Monday.
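For a rough sense of what those rates mean in practice, the short Python sketch below estimates the cost of a single request. It is an illustration only: the helper name `estimate_cost_usd` and the example token counts are hypothetical, and the calculation assumes flat per-token pricing at the two rates quoted above, with no discounts or other tiers.

```python
# Rates as quoted in the article, in US dollars per million tokens.
INPUT_PRICE_PER_MILLION = 3.00
OUTPUT_PRICE_PER_MILLION = 15.00


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Hypothetical helper: estimate the cost of one request in US dollars.

    Assumes flat pricing at the quoted rates; real bills may differ.
    """
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MILLION
    return input_cost + output_cost


if __name__ == "__main__":
    # Illustrative request: 200,000 input tokens and 50,000 output tokens.
    print(f"${estimate_cost_usd(200_000, 50_000):.2f}")
```

Because output tokens cost five times as much as input tokens, the length of the model's responses tends to dominate the bill for generation-heavy work such as long coding sessions.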

Mike Krieger, Anthropic’s chief product officer, emphasized practical benefits over raw capability. “We have found it, and our customers are finding it, very useful for real, actual work,” he told CNBC.

The safety-first positioning targets enterprise customers who prioritize reliability over cutting-edge features. Banks, healthcare companies, and government contractors often choose conservative technology options. Anthropic appears to be betting these customers will value a model that admits its limits rather than inventing answers.