The CPU

A system-on-a-chip contains various components, including the CPU, GPU, memory controller, image signal processor, and more. The Tegra X1 uses 64-bit ARMv8 processing cores, just like the second version of the Tegra K1. (The first version of the K1 had 32-bit ARMv7 cores.) However, Nvidia went with standard ARM reference cores instead of the custom Denver CPU cores it implemented in the second revision of the Tegra K1, the chip that powers the Nexus 9.

Nvidia paid a pretty penny to ARM for an instruction set license that enabled it to build the custom 64-bit Denver CPU, so why stop using it so soon? According to Nvidia, this is all part of an Intel-style tick-tock hardware strategy. The last Tegra K1 was powered by two Denver CPU cores and was produced on a 28nm manufacturing process, a measurement of the relative size of the features on the chip. With Tegra X1, Nvidia wanted to move to a smaller process, in this case 20nm. The smaller manufacturing process allows for more transistors on the chip and less power use at a given level of performance. Thermal design power (TDP) is crucial when you're talking about mobile devices.

We suspect Nvidia didn't use its own CPU core design because the Denver architecture isn't yet ready for the 20nm process. The next version of Tegra, code-named Parker, may feature the follow-on to the Denver CPU cores, but no one knows exactly what Nvidia is up to now. Previous roadmaps pointed to a 16nm process being adopted for Parker, but it sounds like that has been pushed back.

Change the Variants

This is actually the same thing Nvidia did last year with the K1. It first shipped a quad-core chip using standard ARM CPU cores and a Kepler GPU, the chip in the Shield Tablet. A few months later, the 64-bit Denver cores were paired up with Kepler in a newer version of the K1. That's the chip in the Nexus 9. Nvidia has promised that a 20nm Denver variant will arrive as a future version of the X1. Still, for the time being, we're looking at perfectly capable but standard licensed ARM cores.

The Tegra X1 is packing four Cortex-A57 cores (the big cores) and four Cortex-A53 cores (the little cores). The big cores are fast and use more power, while the little cores are great for background processing and are much more power-efficient. Most chips that use this eight-core configuration are tied together using a system from ARM called big.LITTLE. The newest version of this technology moves work between the two clusters with global task scheduling, sometimes called heterogeneous multi-processing, which lets the system use any mix of the eight big and little cores at once.

The Method

Rather than using ARM's method for controlling all eight cores, Nvidia uses cluster migration with a custom cache coherence system to shuffle data between the two clusters. Under this model, the OS scheduler only sees one cluster, big or little, at a time. So what does all that mean? The Tegra X1 only runs processes on one set of cores at a time, but work can be moved back and forth between the big power-hungry cores and the small power-efficient cores. Cluster migration is typically less efficient than global task scheduling. Still, Nvidia says its custom interconnect has vastly improved the power efficiency of cluster migration.
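To make the difference between the two models concrete, here is a minimal Python sketch of what the OS scheduler "sees" under each one. It is only an illustration: the core names, the `load` value, and the `threshold` that triggers a switch are hypothetical and do not describe how Nvidia's hardware or drivers actually decide when to migrate.

```python
# Hypothetical labels for the eight cores described in the article.
BIG = [f"A57-{i}" for i in range(4)]     # four big Cortex-A57 cores
LITTLE = [f"A53-{i}" for i in range(4)]  # four little Cortex-A53 cores


def visible_cores_global_task_scheduling():
    """Global task scheduling: any mix of the eight cores can be used."""
    return BIG + LITTLE


def visible_cores_cluster_migration(load, threshold=0.5):
    """Cluster migration: the scheduler sees only one cluster at a time.

    `load` (0.0 to 1.0) and `threshold` are made-up inputs standing in
    for whatever policy the real system uses to pick a cluster.
    """
    return BIG if load > threshold else LITTLE


if __name__ == "__main__":
    print(visible_cores_global_task_scheduling())
    print(visible_cores_cluster_migration(load=0.9))
    print(visible_cores_cluster_migration(load=0.1))
```

Under cluster migration the result is always four cores from a single cluster, which is why a mixed workload (one heavy thread plus a few light ones) cannot be spread across big and little cores the way it can with global task scheduling.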

This isn't entirely new territory for Nvidia. It was one of the first SoC makers to devise a system using a low-power core in conjunction with traditional full-power CPU cores. The company initially called its design the companion core before renaming it to the much more boring 4-PLUS-1. This tech debuted in the Tegra 3, which paired a single low-speed Cortex-A9 with four standard A9s. Nvidia's engineers have probably learned a lot about ARM cores in the last few years, so hopefully this custom multi-processing setup is better.

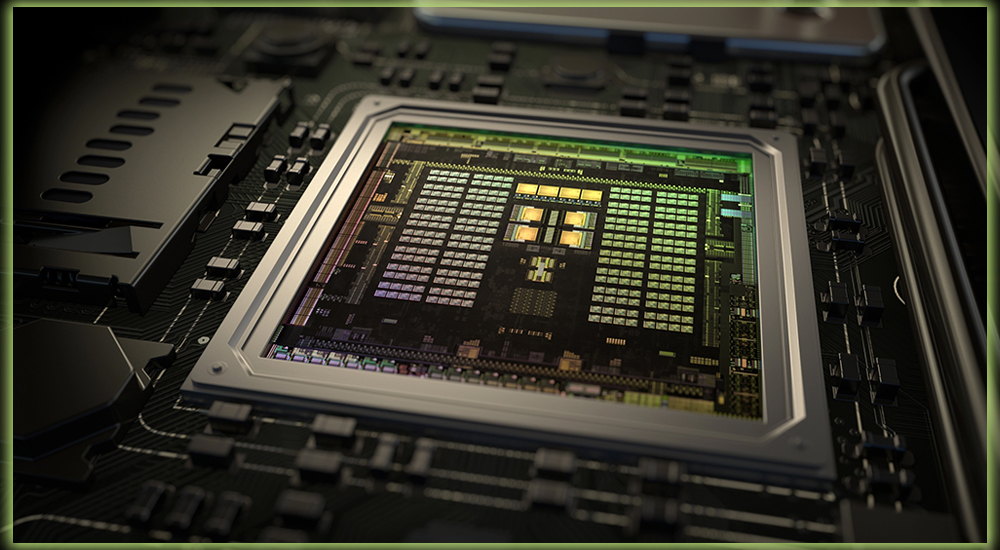

The GPU

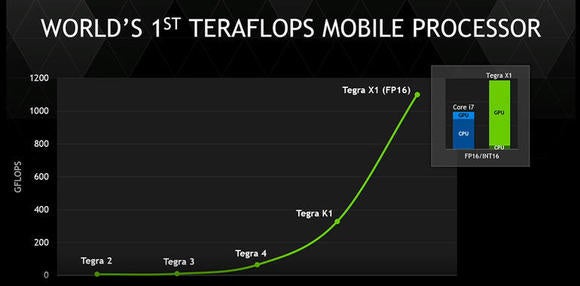

Along with the new 64-bit CPU cores, Tegra X1 packs a brand new GPU based on Nvidia's Maxwell architecture. Nvidia has used its desktop GPU architecture in Tegra before. The Tegra K1 implemented a version of Kepler two years after the first desktop reference cards were out. Maxwell is arriving on mobile in half the time. Nvidia says this is thanks to a focus on mobile within the company. Kepler was designed for desktops and then ported to mobile SoCs. Maxwell, on the other hand, was designed from the ground up with a mobile implementation in mind.

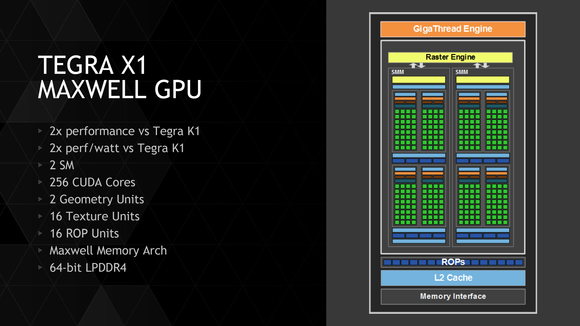

Nvidia's use of licensed ARM CPU cores makes the CPU element of the Tegra X1 very similar to other mobile processors released in 2015. The GPU has to be the differentiator, and Nvidia knows it. Maxwell on mobile supports Unreal Engine 4, DirectX 12, OpenGL 4.5, CUDA, and OpenGL ES 3.1. The Maxwell desktop parts were lauded for their high power efficiency, which carries over to the mobile version. A quick look at the specs of the X1's GPU shows it's a giant leap over that of the K1. Tegra X1's GPU has more CUDA cores (256 vs. 192), two geometry units instead of one, 16 texture units (up from 8 in the K1), and 16 ROPs (up from only 4). There's also a big jump in memory bandwidth to 25.6 GB/s, up from 17 GB/s.
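For a sense of scale, the short Python sketch below turns the figures quoted above into X1-over-K1 ratios. The numbers come straight from the article; the dictionary layout and the `scaling` helper are just illustrative names.

```python
# (Tegra K1, Tegra X1) figures as quoted in the article.
specs = {
    "CUDA cores": (192, 256),
    "geometry units": (1, 2),
    "texture units": (8, 16),
    "ROPs": (4, 16),
    "memory bandwidth (GB/s)": (17.0, 25.6),
}


def scaling(table):
    """Return the X1/K1 ratio for each quoted spec."""
    return {name: x1 / k1 for name, (k1, x1) in table.items()}


if __name__ == "__main__":
    for name, factor in scaling(specs).items():
        print(f"{name}: {factor:.2f}x")
```

The ratios are uneven: the CUDA core count grows by about a third, while the ROP count quadruples, so the jump is largest on the pixel output side rather than in raw shader count.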

Compression

There are a few design changes that account for all the gains, but right at the top of the list is the move to 20nm process technology for the GPU. The X1 also moves to LPDDR4 memory and employs a new type of end-to-end memory compression, which allows Nvidia to stick with a 64-bit memory bus. This should reduce performance bottlenecks due to memory bandwidth, a common issue with mobile GPUs.
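The relationship between bus width and bandwidth is simple arithmetic: peak bandwidth is the number of bytes moved per transfer times the number of transfers per second. The Python sketch below works backwards from the two figures the article does give (a 64-bit bus and 25.6 GB/s) to the transfer rate they imply. It is a theoretical peak only, and it does not model the memory compression, which reduces how much data has to cross the bus rather than widening it.

```python
def peak_bandwidth_gb_s(bus_width_bits, transfers_per_second):
    """Theoretical peak bandwidth in GB/s (1 GB = 1e9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * transfers_per_second / 1e9


def implied_transfer_rate(bandwidth_gb_s, bus_width_bits):
    """Transfers per second needed to reach a given peak bandwidth."""
    bytes_per_transfer = bus_width_bits / 8
    return bandwidth_gb_s * 1e9 / bytes_per_transfer


if __name__ == "__main__":
    rate = implied_transfer_rate(25.6, 64)
    print(f"Implied rate: {rate / 1e6:.0f} million transfers per second")
    print(f"Check: {peak_bandwidth_gb_s(64, rate):.1f} GB/s")
```

With only 8 bytes moving per transfer, every further gain has to come from faster memory or from sending less data, which is where the compression comes in.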

The 256 CUDA cores in Nvidia's mobile Maxwell are impressive, but that's still just a fraction of what you'll find in a desktop Maxwell design. However, Tegra X1 has one feature those more powerful chips don't. Tegra X1's CUDA cores can accelerate some floating point operations by a wide margin, specifically the low-precision FP16 operations. Maxwell only features FP32 and FP64 CUDA cores. On Kepler, FP16 operations were run alone on an FP32 core, which wasted some capacity. Maxwell in the X1 can fuse two FP16 operations of the same type (addition, subtraction, and so on) and run them together on a single FP32 core. Android display drivers and game engines make heavy use of FP16 operations, so this could help.
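The reason two FP16 operations fit on one FP32 core is that two 16-bit values occupy exactly one 32-bit word. The Python sketch below illustrates only that packing idea using the standard `struct` module (its `e` format code is IEEE half precision). The function names are made up for this example, and the code emulates the arithmetic in software; it says nothing about how the hardware is actually wired. In CUDA, the same idea is exposed through the `half2` type and intrinsics such as `__hadd2`.

```python
import struct


def pack_half2(a, b):
    """Pack two FP16 values into a single 32-bit unsigned integer."""
    return struct.unpack("<I", struct.pack("<2e", a, b))[0]


def unpack_half2(word):
    """Split a 32-bit word back into its two FP16 values."""
    return struct.unpack("<2e", struct.pack("<I", word))


def add_half2(x, y):
    """Add two packed pairs element-wise, returning one packed result.

    Conceptually this is one 'fused' operation on a 32-bit lane: both
    halves undergo the same operation (here, addition) at once.
    """
    a0, a1 = unpack_half2(x)
    b0, b1 = unpack_half2(y)
    return pack_half2(a0 + b0, a1 + b1)


if __name__ == "__main__":
    x = pack_half2(1.5, 2.0)
    y = pack_half2(0.25, 3.0)
    print(unpack_half2(add_half2(x, y)))
```

Note the restriction mirrored from the article: both halves get the same operation. A packed word cannot add in one half and multiply in the other, so the speedup depends on workloads having pairs of matching FP16 operations to fuse.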

Efficiency Implementations

When Nvidia announced the Tegra X1, it talked up the chip's efficiency, lauding the process improvements and the GPU tweaks mentioned above. But how efficient is it? We were told that Tegra X1's peak power consumption is 10 watts when rendering an Unreal Engine 4 demo. That's certainly impressive when you consider the Xbox needs 100 watts to do the same thing. However, 10 watts is still way too much for a tablet. Most tablet processors consume maybe half that at the top end.

The Tegra X1's power envelope speaks to the wide variety of applications Nvidia envisions. While the 10-watt number is impressive in one context, it doesn't tell the whole story. According to Nvidia, the power consumption in a tablet powered by Tegra X1 will be on par with Tegra K1. In fact, idle power consumption will be even lower, thanks to the various architectural improvements. Tegra K1 was designed to operate at around 5-8 watts, with infrequent peaks up to 11 watts when running stressful benchmarks. So the X1 will be well within the realm of tablet power requirements.

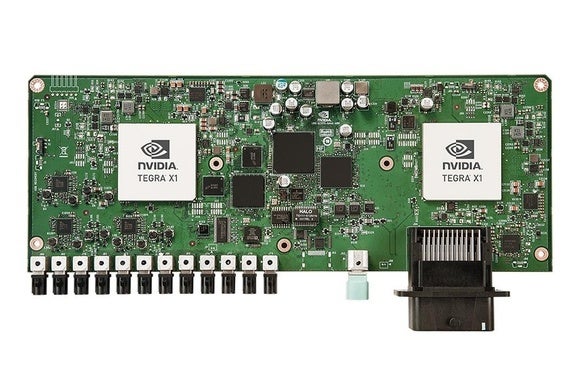

There are two official Tegra X1 implementations, and neither is for phones or tablets. Instead, they're part of Nvidia's new DRIVE platform. The DRIVE PX is a system for fully automated self-driving cars. It's powered by two Tegra X1 chips that can accept live feeds from up to 12 cameras. The more modest DRIVE CX uses a single Tegra X1 chip for infotainment systems and is capable of driving high-resolution displays and digital instrument clusters. The DRIVE applications are at the high end of what Tegra X1 can do.

The Power

The X1 will also find its way into tablets and embedded systems, like the Tegra K1 did. In those situations, it will need to use less than 10 watts of juice. Still, even at power levels similar to other SoCs, the Tegra X1 will be considerably faster. Early benchmarks suggest the X1 will best the Snapdragon 810 by about 15%. But benchmarks only tell part of the story. While impressive on paper, Tegra chips have struggled to gain traction in the market.

Only a handful of devices ran the Tegra K1 (both variants). One of the most notable was made by Nvidia itself, the Shield Tablet. The experimental Project Tango tablet also runs a Tegra K1, but its most prominent use is in the Nexus 9. Is Tegra X1 going to be any different? It's hard to say, but the DRIVE platform will at least ensure there will be more in-house applications for Tegra in the future. A next-generation Shield Portable or Tablet seems like a safe bet, too.

Even if Tegra X1 does find its way into more tablets and embedded devices this time, you'll see a lot more of the Snapdragon 810. That chip is fully compatible with Qualcomm's market-leading LTE modems, and its power envelope is better optimized for phones. The Tegra X1 will probably be restricted to tablets that can handle a higher-wattage chip. Still, it will be fantastic for gaming with that Maxwell-based GPU.