DeepNude is an AI nudifier that uses machine learning to create fake nude images from regular photos—often without consent. Marketed at first as an adult novelty, the app quickly became a warning sign for how AI can be misused. It’s now linked to major issues like deepfake porn, privacy violation, and nonconsensual imagery.

What makes DeepNude especially dangerous is how easy it is to use. With just one photo upload, the software creates AI-generated nudes in seconds. As these tools evolve and spread, they fuel growing concerns about digital safety, nonconsensual deepfakes, and the legal gray areas surrounding image-based abuse. The rise of this tech forces a tough question: how do we protect people when powerful AI tools can strip away their privacy so quickly?

How DeepNude Works

DeepNude technology uses artificial intelligence to turn clothed photos into fake nude images that look disturbingly real. It relies on GANs (generative adversarial networks), in which one neural network creates the image while another checks how real it looks. During training, this back-and-forth pushes the first network to produce increasingly convincing fakes.

These deepfake generators are trained on large image sets, mixing clothed and nude photos. By spotting patterns, the AI learns to guess body shapes and skin textures under clothing. Many versions use open-source code, making them easy for hobbyists and amateur coders to copy or tweak.

Unlike deepfake video tools that need editing skills, DeepNude-style apps are built for speed. Just upload a photo and get an AI-generated nude in seconds—no coding or AI knowledge required. That simplicity is exactly what makes them so risky. Anyone can create synthetic nudes, often without understanding the harm or legal trouble that can follow.

Here’s how the process typically works:

- Uploading a Clothed Photo

The process starts when a user uploads a regular image of someone fully clothed. Most of these apps accept common image formats and require no technical setup.

- AI Predicts the Body Under Clothing

Using trained models built on datasets of nude and clothed images, the software estimates the body shape and skin beneath the clothing. This is done through machine learning, often using GANs or diffusion models.

- Instant Output of a Fake Nude Image

Within seconds, the tool delivers a synthetic nude. No manual editing or further user input is needed; the fake is generated automatically.

- Powered by Open-Source Deepfake Tools

Many nudification apps run on open-source AI frameworks. These models are easy to copy, customize, and share, giving almost anyone access to powerful deepfake tools with little technical skill.

Even though these tools may look like harmless tech demos, they enable serious privacy violations. With just one image, users can create nonconsensual deepfakes—turning ordinary photos into synthetic nudes in seconds.

The Origin and Shutdown of the DeepNude App

The DeepNude app launched in 2019. It was created by a developer who went by the name Alberto, along with a small anonymous team. The tool was shared through its own website and quickly got attention. Users could upload a photo of a clothed woman and get an AI-generated nude in seconds.

Even with a basic interface, the app spread fast. It went viral on Reddit, Twitter, and other forums, mostly out of curiosity—but that didn’t last long.

Users began cloning the app, and it became clear the software could fuel widespread privacy violations and nonconsensual imagery. Facing pressure and recognizing the risks, Alberto shut it down, stating that “the probability that people will misuse it is too high.”

Although the original DeepNude tool is no longer online, its brief existence sparked a wave of imitators. Many of these deepfake clone apps still operate today, often in legal gray areas—continuing the cycle of AI-generated abuse with little oversight.

Types of DeepNude Tools and Variations

Since DeepNude was taken offline, dozens of new nudification tools have surfaced. Most follow the same model: use AI to turn clothed photos into fake nude images. While some use different names, their function is nearly identical. These tools range from basic apps to advanced bots and prompt-based platforms.

Here are the main types of DeepNude-style tools available today:

- Static photo nudifiers: These are simple upload tools. Users send in one photo and get an AI-generated nude version back in seconds.

- Prompt-based AI nude generators: Image generators built on models like Stable Diffusion, or hosted platforms like PlaygroundAI, let users enter NSFW prompts that result in nude images. Users often word these prompts to slip past content rules and filters.

- DeepNude clones and forks: Even though the original app is gone, copies still float around online. Many are downloadable from forums or sketchy websites and come with few restrictions.

- Telegram bots and NSFW APIs: Some users skip apps entirely. Instead, they send photos to bots on Telegram and get fake nudes in return, powered by fast, automated APIs.

- Anime or hentai undress AI tools: Built for anime fans, these platforms create nude versions of animated characters. Many are disguised as safe tools but produce NSFW content through hidden prompts.

Together, these DeepNude alternatives show how fast the undress AI space is growing. Most are anonymous and easy to access.

Why DeepNude Technology Is So Dangerous

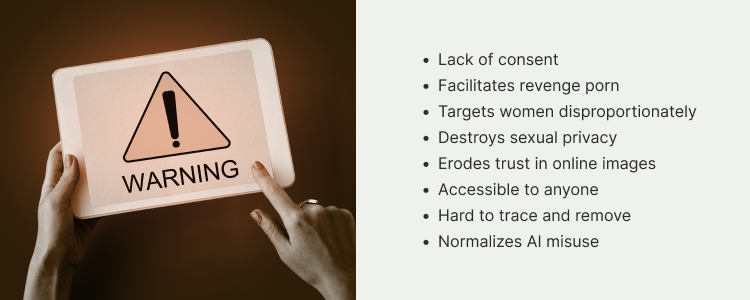

DeepNude and similar AI tools pose serious risks—not just because of what they create, but how easily they do it. With almost no skill required, anyone can generate fake nude images and cause real-world harm.

Here are the key reasons why DeepNude technology is so dangerous:

- No consent involved

Victims never agree to be part of these images. DeepNude-style tools ignore digital autonomy and violate personal boundaries.

- Used for revenge porn

These apps are often used to target ex-partners. Fake nudes are created to shame, blackmail, or humiliate, turning AI into a tool for harassment.

- Targets women the most

Most of these tools are designed to undress women. This reinforces gender-based abuse and puts women at greater risk of digital exploitation.

- Destroys sexual privacy

Even if the image is fake, the emotional and reputational damage is real. Victims often feel exposed, powerless, and violated.

- Erodes trust in images

As AI-generated content becomes more realistic, it’s harder to tell what’s real. This impacts journalism, legal cases, and everyday digital interactions.

- Easy for anyone to access

Many fake nude generators are free or cheap. Teens, trolls, and bad actors around the world can use them with no oversight.

- Almost impossible to remove

Once shared, a fake nude can spread across platforms. Even if deleted from one site, copies can resurface again and again.

- Normalizes AI abuse

These apps create a culture where using AI to exploit others is seen as entertainment or curiosity, rather than a serious violation.

DeepNude isn’t just a one-time issue. It represents a growing threat to sexual privacy, online safety, and ethical AI use.

The Psychological and Social Impact on Victims

For victims of AI-generated abuse, the emotional damage is real—even if the image is fake. DeepNude-style tools create nonconsensual deepfakes that strip people of control over their own likeness. Just knowing that your face or body has been altered and sexualized without consent can trigger deep shame, anxiety, and trauma.

These fake nudes often spread fast—shared on social media, private chats, and forums. Victims may feel powerless as the content circulates beyond their control. The threat doesn’t stop at humiliation. Careers, reputations, and personal relationships can all be damaged by a single viral image.

Women are hit hardest. Their bodies are the main target in most AI nude generators, making them more vulnerable to harassment, stalking, and long-term emotional distress.

The psychological toll can be lasting. Victims report symptoms of depression, PTSD, and constant fear of being watched or exposed again. In many cases, proving the image is fake is difficult. That makes it even harder to take legal action or get support—leaving victims stuck between the truth and the public’s belief.

DeepNude vs. Deepfake: What’s the Difference?

Format

- DeepNude: Still images

- Deepfake: Mostly videos and audio

Manipulation Method

- DeepNude: Removes clothing from a single photo using AI prediction

- Deepfake: Swaps faces or voices in existing video or audio footage

Common Uses

- DeepNude: Fake nude images, nonconsensual porn, targeted harassment

- Deepfake: Celebrity porn, political disinformation, impersonation scams

Harm Potential

- DeepNude: Directly targets individuals (mainly women) for sexual exploitation

- Deepfake: Can be used for misinformation, fraud, and blackmail

Legal Treatment

- DeepNude: Often prosecuted under revenge porn or harassment laws

- Deepfake: In some regions, addressed by deepfake-specific legislation

Legal Responses and Challenges

Laws around the world are struggling to keep up with tools like DeepNude. These nudification apps operate in fast-moving legal gray zones. While some countries have laws against revenge porn or image-based sexual abuse, most weren’t built to handle AI-generated fakes.

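In the United States, protections still depend largely on the state. A few states have updated revenge porn laws to include AI-generated nudes or deepfakes. At the federal level, the TAKE IT DOWN Act, signed in May 2025, makes it a crime to publish nonconsensual intimate images, including AI-generated ones, and requires platforms to remove them when victims report them. But there’s still no federal law that directly targets the nudification tools themselves.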

The UK has made progress with the Online Safety Act and similar laws aimed at synthetic sexual content. Still, enforcement is inconsistent. In the EU, lawmakers are pushing forward with the AI Act—a broader effort to regulate artificial intelligence. But current rules often fall short when it comes to specific threats like DeepNude-style apps.

The legal system faces a tough challenge: protect people from digital harm without stalling AI progress. Until the laws catch up, victims of AI-generated abuse are left with limited options and unclear paths to justice.

Ethical Problems with DeepNude and Similar AI Tools

DeepNude isn’t just a legal issue—it’s an ethical one. These tools reveal serious flaws in how AI is built, used, and accepted online. They don’t just misuse technology; they ignore core values like consent, privacy, and responsibility.

Here are the biggest ethical concerns:

- No consent at all

Victims are digitally stripped without permission. There’s no opt-in, just image-based exploitation. This erases basic personal autonomy.

- Tech doesn’t equal right

The fact that AI can create fake nudes doesn’t mean it should. DeepNude shows what happens when developers skip moral responsibility in favor of shock value or profit.

- Violation of sexual privacy

These tools turn personal photos into sexualized fakes. That crosses serious boundaries, especially when victims don’t even know it’s happening.

- Shared responsibility

Both developers and users are part of the problem. Developers need to build with safeguards. Users need to understand that using these tools causes real harm, even if the images are fake.

At the center of it all is a bigger question: How do we protect people while still building new AI tools? Without clear ethical boundaries for AI, DeepNude-style apps risk becoming “just part of the internet,” and that’s a dangerous path.

Who Uses These Tools and Why?

DeepNude-style apps attract all kinds of users. Some are just curious. Others act out of boredom or view it as a joke. But many use these fake nude generators to embarrass, control, or exploit their targets.

What makes it worse? These tools are easy to access. Many are free or cost very little. You’ll find them on shady websites, in downloadable apps, or through Telegram bots. Most don’t require registration, ID, or even an email. Just upload a photo—and within seconds, you’ve got a fake nude.

That simplicity makes them dangerous. Teens, trolls, and bad actors all have the same power to create nonconsensual content. And because many tools disguise themselves as AI demos or “just for fun” apps, users often don’t stop to think about the harm they’re causing.

But intent doesn’t change the result. Whether someone uses these tools out of curiosity or cruelty, the outcome is the same: someone’s photo gets misused. A fake image is shared without consent. And what seemed like a prank quickly becomes a form of digital abuse.

Real Cases of Abuse Involving DeepNude-Like Tools

The rise of nudification tools and AI-generated nudes has led to serious cases of image-based abuse. From celebrities to everyday people, victims are being targeted without consent—often at massive scale. These incidents reveal just how damaging and widespread this misuse has become.

1. Taylor Swift Deepfake Scandal (2024)

In early 2024, explicit AI-generated images of Taylor Swift spread across platforms like X (formerly Twitter), Instagram, Reddit, and Facebook. One tweet alone hit 45 million views before being taken down. The content was traced back to Telegram groups using AI tools like Microsoft Designer and evading filters through misspellings and prompt tricks. The backlash was swift, reigniting calls for stronger laws against nonconsensual deepfake content.

2. South Korea Telegram Abuse Network (2024)

In August 2024, reports exposed Telegram groups in South Korea where AI-generated sexual images of female teachers and students—including minors—were being shared. Some groups had more than 220,000 members and circulated both fake nudes and private information. Public outrage followed, leading to a new law criminalizing the possession or viewing of sexually explicit deepfake content, with jail time and fines for offenders.

3. Atrioc’s Deepfake Controversy (2023)

Twitch streamer Atrioc came under fire in 2023 after a browser tab open to a site hosting AI-generated porn of female Twitch streamers was briefly visible during a live stream. He admitted to buying deepfake content and apologized publicly. The controversy fueled broader debates about digital consent, content regulation, and the ethical responsibility of both users and platforms.

Can DeepNude Be Stopped or Blocked?

Stopping DeepNude and similar fake nude apps isn’t easy—but it’s not impossible. As awareness grows, platforms, lawmakers, and developers are stepping in to reduce harm and push back against nonconsensual AI-generated content.

Here are the main strategies being used to combat these tools:

- Platform bans

Sites like Reddit, Discord, and X (formerly Twitter) now ban AI-generated nudes shared without consent. Accounts that promote nudification tools or distribute fake nude images are suspended or removed.

- AI deepfake detection tools

New software can spot signs of fake content by analyzing pixel patterns, lighting, and textures. These tools help flag AI-generated images before they spread too far.

- Legal takedowns and enforcement

Victims and legal advocates are using privacy laws, copyright claims, and revenge porn statutes to take down fake nudes. In some cases, creators and sharers face legal consequences.

- Education on digital consent

Nonprofits, schools, and advocacy groups are working to teach people, especially teens, about the risks of fake nudes, AI abuse, and the need for consent in digital spaces.
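
Once a fake has been reported and taken down, one way a platform might keep copies from resurfacing is to compare a compact fingerprint of each new upload against fingerprints of images it has already removed. The sketch below illustrates that idea with a simple "average hash" in plain Python. It is a toy under stated assumptions, not a description of how any named platform works: the function names (`shrink`, `average_hash`, `hamming`, `matches_reported`), the 8x8 fingerprint size, and the distance threshold of 5 are all hypothetical, and images are assumed to be already decoded into grids of grayscale values.

```python
from typing import Iterable, List

Grid = List[List[int]]  # grayscale pixels (0-255), one inner list per row


def shrink(pixels: Grid, size: int = 8) -> Grid:
    """Downscale to size x size by averaging blocks (assumes both sides >= size)."""
    h, w = len(pixels), len(pixels[0])
    out = []
    for r in range(size):
        r0, r1 = r * h // size, (r + 1) * h // size
        row = []
        for c in range(size):
            c0, c1 = c * w // size, (c + 1) * w // size
            block = [pixels[y][x] for y in range(r0, r1) for x in range(c0, c1)]
            row.append(sum(block) // len(block))
        out.append(row)
    return out


def average_hash(pixels: Grid, size: int = 8) -> int:
    """Fingerprint: one bit per shrunken pixel, set when it is brighter than the mean."""
    flat = [p for row in shrink(pixels, size) for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two fingerprints."""
    return bin(a ^ b).count("1")


def matches_reported(candidate: Grid, reported: Iterable[int], max_distance: int = 5) -> bool:
    """True if the upload's fingerprint is close to any previously reported one."""
    h = average_hash(candidate)
    return any(hamming(h, r) <= max_distance for r in reported)


# Synthetic test data (not real images): a 16x16 horizontal gradient,
# a slightly brightened copy, and an unrelated (mirrored) pattern.
original = [[x * 16 for x in range(16)] for _ in range(16)]
brighter = [[min(255, p + 10) for p in row] for row in original]
inverted = [[255 - p for p in row] for row in original]

reported = [average_hash(original)]           # fingerprints of removed images
print(matches_reported(brighter, reported))   # near-copy of a reported image
print(matches_reported(inverted, reported))   # unrelated image
```

Because only the fingerprint is stored, a scheme like this would not require the platform to keep the reported image itself. A hash this simple is easy to defeat with cropping or heavier edits, which is one reason detection is only part of the answer.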

There’s no single solution to stop nudification apps. But by combining policy, detection tech, and public education, society can start limiting the damage and holding people accountable.

AI, Consent, and the Future of Nudification Tools

As artificial intelligence advances, the ethical stakes rise with it. Nudification tools like DeepNude highlight the dark side of innovation—where tech can strip away privacy and ignore consent in seconds. While AI offers massive potential across fields like healthcare, education, and business, it also opens the door to harmful misuse when left unchecked.

The future of AI will be shaped by the choices we make now. Will platforms and developers build with responsibility in mind? Or will convenience, curiosity, and viral appeal continue to drive abuse?

One thing is clear: consent must be at the heart of every digital tool we create. AI development without ethical boundaries leads to exploitation—not progress. To build a future where tech empowers rather than harms, developers, users, and platforms must treat privacy and accountability as non-negotiable.

FAQs About DeepNude and Fake Nude AI

How does DeepNude create fake nude images?

DeepNude uses artificial intelligence—specifically generative adversarial networks (GANs)—to predict and render what a person might look like without clothes. By analyzing a clothed photo, the app generates a fake nude image that appears realistic, despite being entirely fabricated.

Can you get in legal trouble for using DeepNude?

Yes. Depending on your location, using DeepNude-style apps to create or share nonconsensual explicit content can fall under revenge porn laws, harassment, or privacy violations. Even if the images are fake, courts increasingly treat such behavior as a serious offense.

Are there tools that can detect if an image is fake?

Yes, AI detection tools are being developed to spot inconsistencies in lighting, shadows, and pixel patterns that reveal a fake image. These tools help platforms and law enforcement verify whether a photo was manipulated using nudification software.

Final Thoughts

DeepNude-style apps mark one of the most disturbing intersections of AI and privacy today. While they show how powerful artificial intelligence has become, they also reveal how easily that power can be misused. The ability to generate fake nude images without consent isn’t just a tech trick—it’s a direct violation of someone’s dignity, safety, and autonomy.

The harm goes beyond embarrassment. Victims face long-term damage, from mental health struggles to legal gray areas where justice is hard to find. And as AI tools become easier to use, the risk grows.

This is why developers, tech platforms, and policymakers must act now. We need clear ethical rules. We need accountability built into the systems. And we need AI to move in a direction that protects people, not exploits them.

Stopping apps like DeepNude is about more than one tool. It’s about deciding what kind of digital future we want—one where tech respects consent and helps people, or one where abuse is just a click away.
