YouTube has rolled out its first major tool against unauthorized deepfakes: a detection system that helps creators identify videos that use artificial intelligence (AI) to replicate their faces.

The company began notifying eligible creators in the YouTube Partner Program via email on October 2. Access will expand to all Partner Program members over the coming months, according to YouTube.

Creators must verify their identity before using the tool. The process requires submitting a government-issued photo ID and recording a brief selfie video on a smartphone. YouTube uses this data to create a “face template” that scans new video uploads for potential matches.

The detection system works similarly to Content ID, which identifies copyrighted material. But instead of scanning for protected audio or video, it searches for a person’s facial likeness.

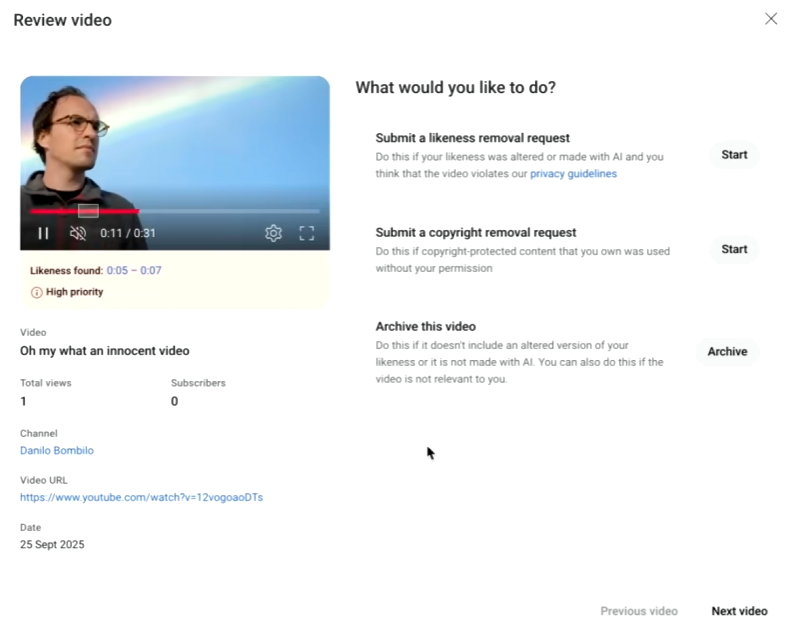

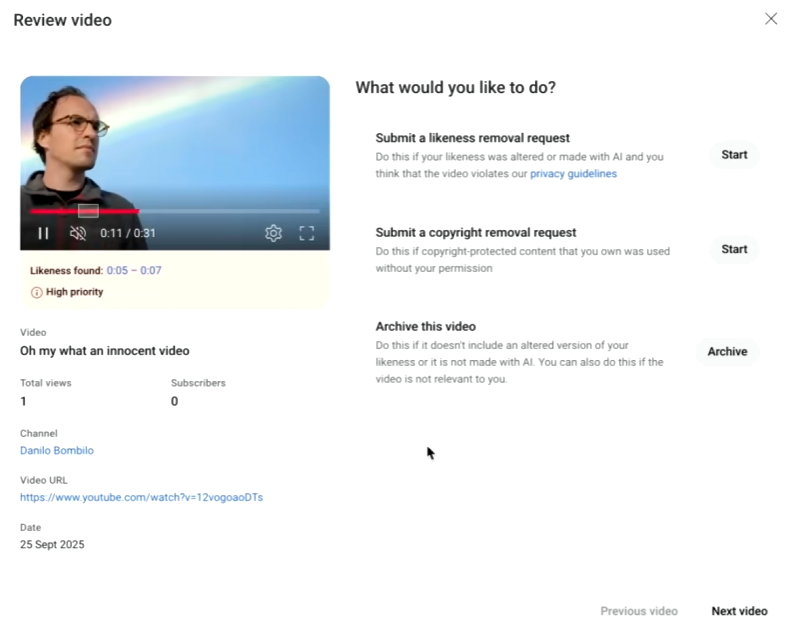

Flagged videos appear in a “For review” section within YouTube Studio’s Content Detection menu. Creators can then examine each video and decide whether to submit a removal request, file a copyright claim, or archive the match if it isn’t a violation.

Source: YouTube

YouTube doesn’t promise to remove every reported video. Human reviewers assess each request based on several factors, including whether the content is parody, how realistic it appears, and the context in which it is presented. A convincing fake showing someone endorsing a product or committing a crime is more likely to be taken down than satirical content.

“What this new technology does is scale that protection,” said Amjad Hanif, YouTube’s vice president of creator products, in an interview with Axios.

The tool emerged from a partnership with Creative Artists Agency that began in December 2024. High-profile creators, including MrBeast, Marques Brownlee, and Doctor Mike, tested the system during its pilot phase.

YouTube acknowledges the system may flag legitimate content. Creators might see short clips from their own videos that other channels have used under fair use. The company describes the tool as an “experimental feature” still in limited beta.

The platform has taken other steps to address AI-generated content. In March 2024, YouTube began requiring creators to label uploads that include realistic AI-generated or altered material. The company also developed a separate system for reporting AI-generated music that mimics an artist’s voice.

YouTube supports the NO FAKES Act, proposed federal legislation that would require platforms to act quickly on takedown requests involving unauthorized AI-generated replicas of a person’s likeness.

“For us, a responsible AI future needs two things: clear legal frameworks like NO FAKES, and the scalable technology — which we’re building — to actually enforce those principles on our platform,” Hanif said.

Creators can opt out of the detection system at any time. Scanning stops 24 hours after deactivation.